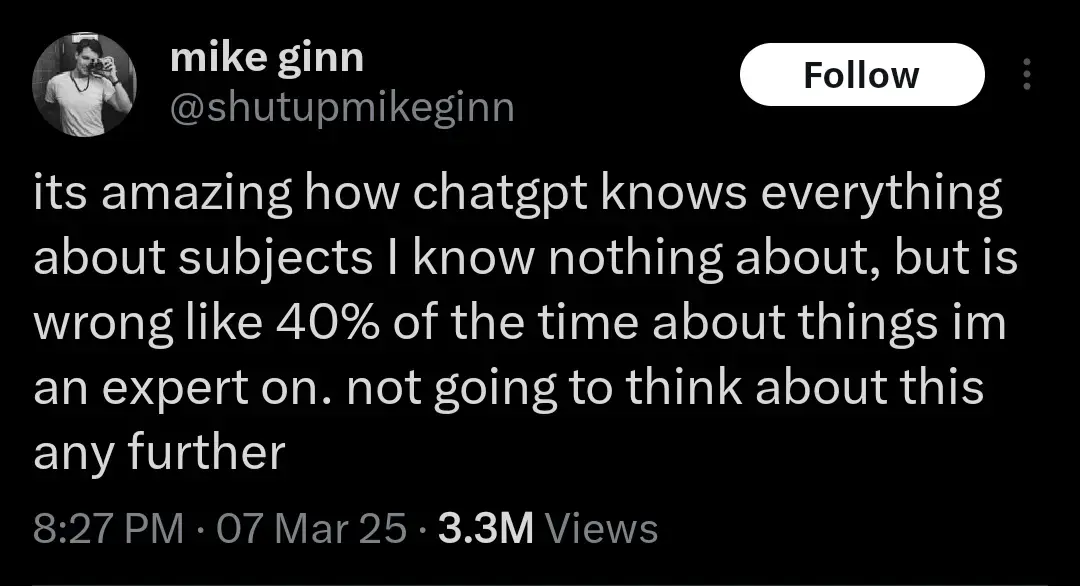

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let’s not think about that either. AI Bad!

This is a salient point that’s well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It’s super easy to call out a bad research study and have it retracted. But you can’t just explain to an AI that that study was wrong, you have to completely retrain it every time. Exacerbating this issue is the way that people tend to view large language models as somehow objective describers of reality, because they’re synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

I’ll bite. Let’s think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it
- now there is an llm (fuck capitalization, I hate the ways they are shoved everywhere that much) trained on their output
- now the llm is asked about the topic and computes the answer string

By definition that answer string can contain all the probably-wrong things without proper indicators (“might”, “under such and such circumstances” etc)

If you want to say 40% wrong llm means 40% wrong sources, prove me wrong

It’s more up to you to prove that a hypothetical edge case you dreamed up is more likely than what happens in a normal bell curve. Given the size of typical LLM data this seems futile, but if that’s how you want to spend your time, hey knock yourself out.

Lol. Be my guest and knock yourself out, dreaming you know things

- AI Bad!

Yes, it is. But not in, like, a moral sense. It’s just not good at doing things.

Bath Salts GPT

TIL becoming dependent on a tool you frequently use is “something bizarre” - not the ordinary, unsurprising result you would expect with common sense.

I know a few people who are genuinely smart but got so deep into the AI fad that they are now using it almost exclusively.

They seem to be performing well, which is kind of scary, but sometimes they feel like MLM people with how pushy they are about using AI.

I knew a guy I went to rehab with. Talked to him a while back and he invited me to his Discord server. It was him, and like three self-trained LLMs and a bunch of inactive people who he had invited, like me. He would hold conversations with the LLMs like they had anything interesting or human to say, which they didn’t. Honestly a very disgusting image. I left because I figured he was on the shit again and had lost it, and I didn’t want to get dragged into anything.

It’s too bad that some people seem not to comprehend that all ChatGPT is doing is word prediction. All it knows is which next word fits best based on the words before it. To call it AI is an insult to AI… we used to call OCR AI, now we know better.
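For anyone who wants to see what "which next word fits best based on the words before it" looks like at its crudest, here is a toy sketch. It is not how ChatGPT works internally (real LLMs use neural networks over tokens and a much longer context); it only counts which word most often follows the previous one. The function names and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus, purely for illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(predict_next(model, "the"))
```

The predictor has no idea whether its output is true. It only reproduces whatever followed most often in the text it was given, which is the point the comment above is making.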

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things. I got an AI search response just yesterday that dramatically understated an issue by citing an unscientific ideologically based website with high interest and reason to minimize said issue. The actual studies showed a 6x difference. It was blatant AF, and I can’t understand why anyone would rely on such a system for reliable, objective information or responses. I have noted several incorrect AI responses to queries, and people mindlessly citing said response without verifying the data or its source. People gonna get stupider, faster.

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things

Ahem. Wasn’t there an election recently, in some big country, with an uncanny similarity to that?

Yeah. Got me there.

That’s why I only use it as a starting point. It spits out “keywords” and a fuzzy gist of what I need, then I can verify or experiment on my own. It’s just a good place to start or a reminder of things you once knew.

An LLM is like talking to a rubber duck on drugs while also being on drugs.

Same type of addiction as people who think the Kardashians care about them or who schedule their whole lives around going to Disneyland a few times a year.

Not a lot of meat on this article, but yeah, I think it’s pretty obvious that those who seek automated tools to define their own thoughts and feelings become dependent. If one is so incapable of mapping out one’s thoughts and putting them to written word, it’s natural they’d seek ease and comfort with the “good enough” (fucking shitty as hell) output of a bot.

I need to read Amusing Ourselves to Death…

My notes on it https://fabien.benetou.fr/ReadingNotes/AmusingOurselvesToDeath

But yes, stop scrolling, read it.

I tried that Replika app before AI was trendy and immediately picked up on the fact that the AI companion thing is literal garbage.

I may not like how my friends act but I still respect them as people so there is no way I’ll fall this low and desperate.

Maybe it’s about time we listened to that internet wisdom about touching some grass!

I mean, I stopped in the middle of the grocery store and used it to choose the best frozen chicken tenders brand to put in my air fryer. …I am ok though. Yeah.

That’s… Impressively braindead

That’s the joke!

At the store it calculated which peanuts were cheaper: 3 pounds of shelled peanuts on sale, or 1 pound of no-shell peanuts at full price.

New DSM / ICD is dropping with AI dependency. But it’s unreadable because image generation was used for the text.

Neat snaps camera

Neat

You can tell by the way that it is!

It’s not often you get all this neatness in one place