5·2 years ago

You can follow Lemmy communities from Mastodon, but not the other way around. And it doesn’t work well anyway.

You can use Kbin for both Lemmy and Mastodon.

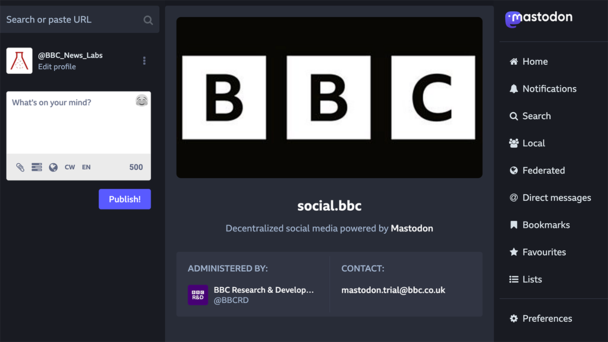

But Mastodon content is not very Lemmy-like, so if all you want is the BBC toots without a Mastodon account, you might as well bookmark bbc.social and scroll through it occasionally.

1·2 years ago

Read it yourself, but think it through this time.

1·2 years ago

And having seen your edit of the OP: I quit Facebook something like 15 years ago and only ever had fake-name accounts.

I quit Twitter the day Musk took over. I quit Reddit the night before it went dark. I’ve been boycotting Google as much as possible for well over a decade.

Have I polished my halo enough for you to stop sneering and start growing up?

FFS

5·2 years ago

> I have trouble believing that humans can’t get by without meat, or cars, or carbon fuel, or mass-produced clothes, or supermarkets, or .

It does not matter what you believe, or what you prioritise. Other people have different beliefs and have made different choices. If you want them to think and choose differently, don’t start off by telling them that they’re scum while you polish your imaginary halo.

And for fuck’s sake, don’t fill the Fediverse up with so much narcissistic, whiny crap that everyone who isn’t you fucks off somewhere else.

This is not hard.

10·2 years ago

If you’re going to drink that much sparkling water (as I do), invest in a Drinkmate or similar. It’s about as cheap as the very cheapest sparkling water, but you end up with much, much less plastic to pretend to recycle.

16·2 years ago

Individual choices are constrained. Admonishing people for living in the world we live in is straight from the Big Carbon playbook.

13·2 years ago

Mastodon’s #explore page is a good way to find stuff you wouldn’t see in your own feed. (It’s how I found this article.)

There are also various bots that let you follow people on Twitter (birdsite.makeup, etc.), although my instance has decided it doesn’t like them, so they’re a bit harder to find than they were.

But yes, I think the article does a good job of articulating the problems. I hope they get solved, because there’s a lot I like very much about Mastodon, but it doesn’t have the depth and breadth of content (yet). And hashtags don’t work well enough as a replacement for search (I followed #BBC to get more news in my feed and ended up with a bit of news and a lot of porn).

3·2 years ago

Oh. You think the OP wrote the link?

No. I’ll edit in some quote marks. Apologies for any confusion.

20·2 years ago

Every right-wing accusation is a confession.

3·2 years ago

Without a pass, you’d need a passport to prove eligibility. Not everyone owns a passport.

19·2 years ago

Yes. It’s happened to me and it is a head fuck. The email was from a business with a perfectly legit email address.

1·2 years ago

As far as I can see, they don’t list the instances they trawled: just “the top 25” on a random day, with a link to the site they got the ranking from, but no list of the instances themselves.

> We performed a two day time-boxed ingest of the local public timelines of the top 25 accessible Mastodon instances as determined by total user count reported by the Fediverse Observer…

That said, most of this seems to come from the Japanese instances, which most other instances already defederate from precisely because of CSAM. From the report:

> Since the release of Stable Diffusion 1.5, there has been a steady increase in the prevalence of Computer-Generated CSAM (CG-CSAM) in online forums, with increasing levels of realism.[17] This content is highly prevalent on the Fediverse, primarily on servers within Japanese jurisdiction.[18] While CSAM is illegal in Japan, its laws exclude computer-generated content as well as manga and anime. The difference in laws and server policies between Japan and much of the rest of the world means that communities dedicated to CG-CSAM—along with other illustrations of child sexual abuse—flourish on some Japanese servers, fostering an environment that also brings with it other forms of harm to children. These same primarily Japanese servers were the source of most detected known instances of non-computer-generated CSAM. We found that on one of the largest Mastodon instances in the Fediverse (based in Japan), 11 of the top 20 most commonly used hashtags were related to pedophilia (both in English and Japanese).

Some history for those who don’t already know: Mastodon is big in Japan. The reason why is… uncomfortable

I haven’t read the report in full yet, but it seems to be a perfectly reasonable set of recommendations for improving moderators’ ability to stop this stuff from being posted (beyond defederating from dodgy instances, which most if not all non-dodgy instances already do).

It doesn’t seem to address the issue of some instances existing largely so that this sort of stuff can be posted.

12·2 years ago

It’s OK. Ordinary people will have no trouble at all making sure they use a different vehicle every time they drive their kid to college or collect an elderly relative for the holidays. This will only inconvenience serious criminals.

0·2 years ago

Consent-O-Matic is great, but it does occasionally get stuck in an endless loop on particularly devious websites.

You can change a couple of settings in Firefox to deal with most (but not all) cookie banners instantly.

0·2 years ago

You’re agreeing with me but using more words.

I’m more annoyed than upset. This technology is eating resources which are badly needed elsewhere and all we get in return is absolute junk which will infest the literature for decades to come.

0·2 years ago

In context. And that is exactly how they work. It’s just a statistical prediction model with billions of parameters.

0·2 years ago

It will almost always be detectable if you just read what is written, especially for academic work. It doesn’t know what a citation is, only what one looks like and where one appears. It can’t summarise a paper accurately. It’s easy to force laughably bad output just by asking the right sort of question.

The simplest approach to setting homework is to give students the LLM output and have them check it for errors and omissions. LLMs can’t critique their own work, and students probably learn more from chasing down errors than from filling a blank sheet of paper for the sake of it.

Just highlighting the exploitation of migrant workers here, like much of Twitter’s remaining workforce, apparently. It also reminded me of this story: The fishermen:

These people are fucking sick. The whole system that denies people the legal right to work just so they can be more easily exploited is fucking sick.

I’m going to go and punch some walls. Laters.