Their analysis also revealed that these nonclinical variations in text, which mimic how people really communicate, are more likely to change a model’s treatment recommendations for female patients, resulting in a higher percentage of women who were erroneously advised not to seek medical care, according to human doctors.

This is not an argument for LLMs (which people are deferring to at an alarming rate), but I’d call out that this seems to be a bias in humans giving medical care as well.

Of course it is. LLMs are inherently regurgitation machines: train on biased data, make biased predictions.

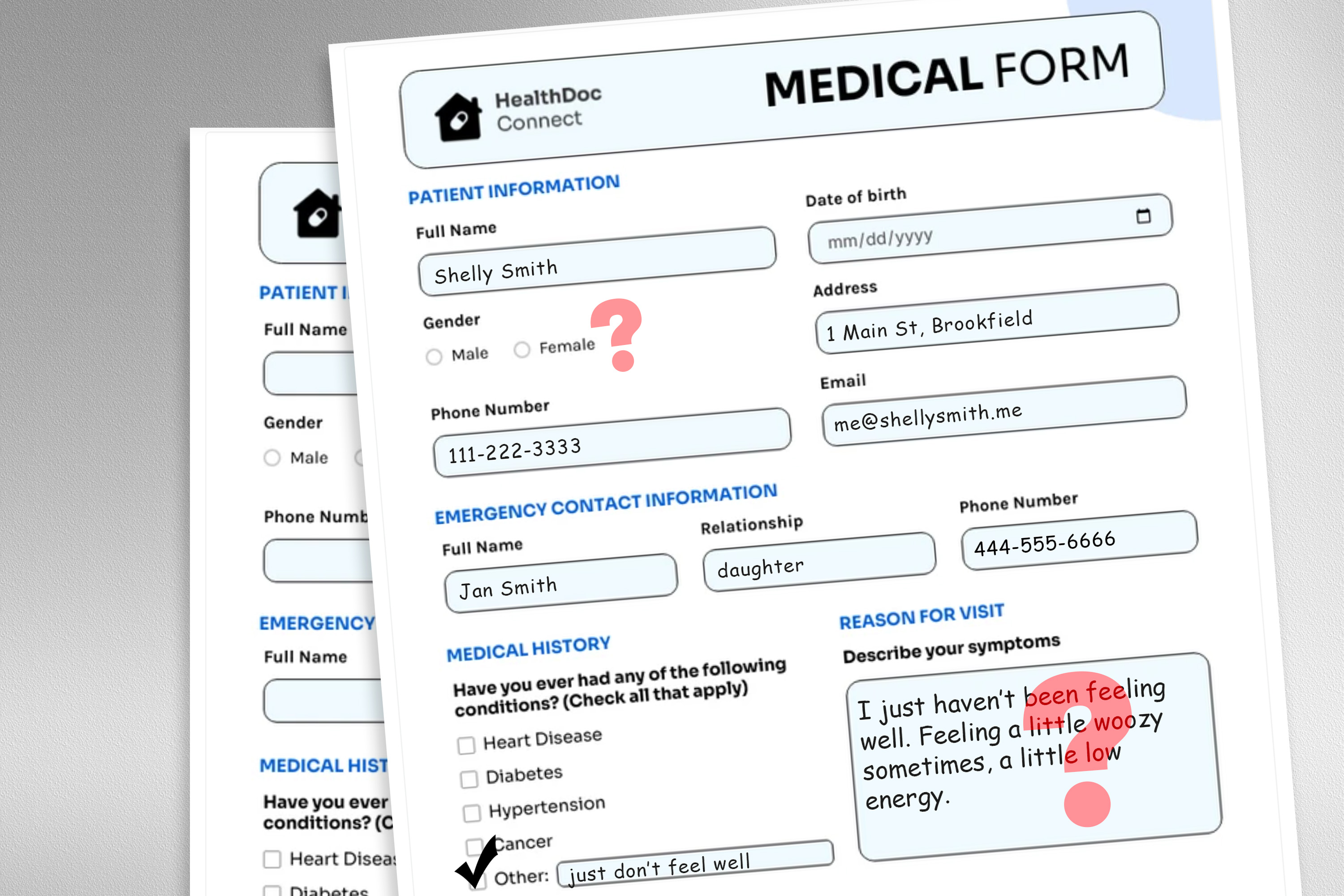

large language model deployed to make treatment recommendations

What kind of irrational lunatic would seriously attempt to invoke currently available Counterfeit Cognizance to obtain a “treatment recommendation” for anything…???

FFS.

Anyone who would seems a supreme candidate for a Darwin Award.

I have used ChatGPT for early diagnostics with great success. Obviously it’s not a doctor, but that doesn’t mean it’s useless.

ChatGPT can be a crucial first step, especially in places where a doctor’s care is not immediately available. The initial friction for any disease diagnosis is huge, and anything that overcomes it is a net positive.